It may well be the most frustrating and inspiration-crushing problem in home recording sessions: hearing the recorded source back a fraction of a second later than it is played.

And yet, it’s incredibly common and tricky to work around. In this blog post, we discuss what digital audio latency is, what causes it and how to reduce it.

When recording in a DAW, the total round-trip latency is the time it takes for an input signal to be converted from analog to digital, pass into the DAW and back out, and be converted from digital back to analog. If you rely on the DAW for software monitoring, for processing, or even to play a software synth (in the case of a MIDI input), a long delay will seriously affect the musician’s performance and can make a session anywhere from unpleasant to impossible.

What is latency?

To go from the analog world into the DAW, and vice versa, a few things have to happen, all of which take a tiny but real amount of time. The incoming signal is sampled, or “measured”, many times per second and converted to a digital representation. These numbers are queued and sent from the audio interface to the computer, where they are queued again until the operating system has time to pass them on to the application that’s listening for them, usually a DAW. In the DAW, the signal can be processed by effect plug-ins, mixed with the rest of the session, and sent back out to the audio interface to be monitored on speakers or headphones.

The amount of data passing through for every second of audio depends mainly on the sample rate, the bit depth and the number of channels. Simply put, the sample rate is the number of times per second the analog signal is measured, and the bit depth determines how precisely each measurement is stored. That precision can range from precise (2 bytes or 16 bits per sample, so 65,536 possible values) to VERY precise (3 bytes or 24 bits per sample, so almost 17 million possible values). For a given connection and protocol, sending more data takes longer.
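To put numbers on this, here is a small Python sketch of the raw data rate. It assumes plain uncompressed PCM and ignores protocol overhead and padding (some interfaces carry 24-bit samples in 32-bit containers, for example), so treat the results as a lower bound rather than what actually travels over the cable.

```python
def bytes_per_second(sample_rate: int, bit_depth: int, channels: int) -> int:
    """Raw PCM data rate: samples per second x bytes per sample x channels."""
    bytes_per_sample = bit_depth // 8
    return sample_rate * bytes_per_sample * channels


def possible_values(bit_depth: int) -> int:
    """Number of distinct values one sample of this bit depth can take."""
    return 2 ** bit_depth


if __name__ == "__main__":
    print(bytes_per_second(44_100, 16, 2))  # 176400 bytes/s: stereo, 16-bit, 44.1 kHz
    print(bytes_per_second(96_000, 24, 8))  # 2304000 bytes/s: 8 channels, 24-bit, 96 kHz
    print(possible_values(16))              # 65536
    print(possible_values(24))              # 16777216
```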

Connection types

The type of connection and data transfer protocol between your audio interface and computer naturally affects how long it takes for the data to arrive where it needs to be. For instance, PCIe cards are hard to beat in this regard because they plug directly into the motherboard, which makes them attractive to professional studios. Thunderbolt often has the benefit of reading directly from memory without buffering, resulting in a drastic reduction of latency. But as audio is relatively low-bandwidth compared to, for instance, video or large file transfers, even USB 2.0 can carry it efficiently enough that the connection itself is rarely much of a bottleneck.
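A rough Python sketch shows why bandwidth is rarely the issue. It compares one direction of raw PCM audio against USB 2.0’s nominal 480 Mbit/s signalling rate; usable throughput is noticeably lower than that figure and both directions share the bus, so this is only a ballpark indication.

```python
USB2_NOMINAL_BITS_PER_S = 480_000_000  # USB 2.0 "High Speed" nominal signalling rate


def link_share(sample_rate: int, bit_depth: int, channels: int,
               link_bits_per_s: int = USB2_NOMINAL_BITS_PER_S) -> float:
    """Fraction of a link's nominal bit rate used by one direction of raw PCM audio."""
    audio_bits_per_s = sample_rate * bit_depth * channels
    return audio_bits_per_s / link_bits_per_s


if __name__ == "__main__":
    # 32 channels at 96 kHz / 24-bit, one direction, no protocol overhead counted
    print(f"{link_share(96_000, 24, 32):.1%}")  # 15.4%
```

Even a large multichannel session uses a modest slice of the nominal rate, which is why latency depends far more on buffering and drivers than on raw bandwidth.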

Drivers

You may only really think about drivers when unpacking and installing a new audio interface, but they have a huge impact on total system latency, one that is not always easy to quantify. First of all: what is a driver? It is a computer program that other programs (like your DAW) use to communicate with a hardware device (like your audio interface). Because drivers work so close to the hardware, they are highly dependent on the type of computer and its operating system. The default Windows audio driver is famously inadequate for professional applications, so interface manufacturers usually recommend a more specialized driver such as ASIO. On macOS, the built-in Core Audio system is regarded as a more professional tool. On top of that, many interfaces ship with their own drivers, designed to provide even faster audio transfer.

Buffers

In data transfer contexts (and audio interfacing is one), a buffer is a block of digital memory that temporarily holds incoming data until it can be passed on, which is necessary because the transfer is not instantaneous. In each direction, there is usually one buffer in the audio interface and another in the driver.
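To see how those buffers add up, here is a rough Python sketch of a round-trip estimate: every buffered sample adds one sample period of delay. The buffer sizes and the converter delay in the example are made-up numbers, not measurements of any particular interface, and real drivers may add safety buffers that this simple sum leaves out.

```python
from dataclasses import dataclass


@dataclass
class PathBuffers:
    """Hypothetical buffer sizes (in samples) along one direction of the signal path."""
    interface: int
    driver: int


def round_trip_ms(sample_rate: int, inbound: PathBuffers, outbound: PathBuffers,
                  converter_samples: int = 0) -> float:
    """Rough round-trip estimate: each buffered sample adds 1/sample_rate seconds.

    converter_samples lumps together the A/D and D/A converter delays, which are
    not buffers you can set, but still add a fixed number of samples.
    """
    total = (inbound.interface + inbound.driver
             + outbound.interface + outbound.driver
             + converter_samples)
    return 1000.0 * total / sample_rate


if __name__ == "__main__":
    # Made-up example: 256-sample driver buffers, 32-sample interface buffers
    # and 40 samples of combined converter delay, all at 48 kHz.
    buffers = PathBuffers(interface=32, driver=256)
    print(round(round_trip_ms(48_000, buffers, buffers, converter_samples=40), 2))  # 12.83
```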

The buffer size can usually be set in the DAW. If you want to decrease your system’s delay, just reduce the buffer size! Of course, nothing is that easy: if your buffer is TOO small, the necessary processing won’t finish in time and you’ll hear clicks and dropouts. The computer has to fill each new buffer before the previous one has finished playing, and the smaller the buffer, the shorter that deadline. Meanwhile, the processor has to run your plug-ins while also dealing with many other things, such as updating graphics and, yes, checking email.
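Here is a simplified Python model of that deadline, under loudly stated assumptions: each processing cycle costs a fixed overhead (scheduling, other tasks competing for the CPU) plus some work per sample. The overhead and per-sample figures are invented for illustration; real systems also suffer occasional spikes, which is what usually causes the odd click.

```python
def buffer_deadline_ms(buffer_size: int, sample_rate: int) -> float:
    """How long one buffer lasts, i.e. the time budget to produce the next one."""
    return 1000.0 * buffer_size / sample_rate


def cycle_cost_ms(buffer_size: int, overhead_ms: float, per_sample_us: float) -> float:
    """Hypothetical cost model: fixed per-cycle overhead plus per-sample work."""
    return overhead_ms + per_sample_us * buffer_size / 1000.0


def drops_out(buffer_size: int, sample_rate: int,
              overhead_ms: float, per_sample_us: float) -> bool:
    """True if a processing cycle takes longer than the buffer it must fill."""
    cost = cycle_cost_ms(buffer_size, overhead_ms, per_sample_us)
    return cost > buffer_deadline_ms(buffer_size, sample_rate)


if __name__ == "__main__":
    # Made-up numbers: 1 ms overhead per cycle, 10 microseconds of work per sample.
    for size in (32, 64, 128, 256):
        result = "dropout" if drops_out(size, 48_000, 1.0, 10.0) else "ok"
        print(size, result)
```

With these invented numbers, buffers below roughly 92 samples drop out at 48 kHz, because the fixed overhead no longer fits. If the per-sample work alone exceeded the sample period (about 20.8 microseconds at 48 kHz), no buffer size would help.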

At a higher sample rate, the same buffer size results in lower latency. For instance, at 44.1 kHz, 512 samples take about 12 milliseconds; at 96 kHz, it’s closer to 5 milliseconds. But again, simply increasing the sample rate won’t solve much, as more samples then have to be processed in less time, and you will run into glitches sooner.
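A quick Python sketch reproduces those figures and shows the trade-off: doubling the sample rate halves the duration of a buffer, but it also halves the time available to process each sample.

```python
def buffer_latency_ms(buffer_size: int, sample_rate: float) -> float:
    """Duration of one buffer in milliseconds."""
    return 1000.0 * buffer_size / sample_rate


def sample_budget_us(sample_rate: float) -> float:
    """Time available per sample, in microseconds, if processing must keep pace."""
    return 1_000_000.0 / sample_rate


if __name__ == "__main__":
    for rate in (44_100, 48_000, 96_000):
        print(f"{rate} Hz: 512 samples = {buffer_latency_ms(512, rate):.1f} ms, "
              f"{sample_budget_us(rate):.1f} us per sample")
```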

Putting these delays into perspective: if another musician or their amp is two meters away from you, the sound takes about 6 milliseconds to reach your ear. A total latency of around that duration shouldn’t bother you much. But at around 12 milliseconds, experienced ears will start to notice.
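The conversion between latency and distance is simple arithmetic. This Python sketch assumes a speed of sound of 343 m/s, the usual figure for air at roughly 20 °C.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # in air at roughly 20 °C


def acoustic_delay_ms(distance_m: float) -> float:
    """Time for sound to travel distance_m through air, in milliseconds."""
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S


def equivalent_distance_m(latency_ms: float) -> float:
    """Distance from a sound source that would produce the same delay."""
    return SPEED_OF_SOUND_M_PER_S * latency_ms / 1000.0


if __name__ == "__main__":
    print(f"2 m away: {acoustic_delay_ms(2.0):.1f} ms")           # 5.8 ms
    print(f"12 ms latency: {equivalent_distance_m(12.0):.1f} m")  # 4.1 m
```

In other words, 12 milliseconds of latency is roughly like standing four meters from your amp.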

What can you do?

You can’t improve what you can’t measure. An easy way to test the real round-trip latency is a true analog “loopback”: connect an output of the audio interface to one of its inputs with a cable, play a signal through that output and record it on another track via the input. The offset between the two tracks in the DAW shows the actual delay.
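If you export the played and recorded loopback tracks as lists of samples (the export step is not shown and depends on your DAW), a brute-force cross-correlation can find the offset for you. This Python sketch simulates the recording instead of using a real one. Keep in mind that if your DAW already applies its own latency compensation, the offset you measure is what remains after that correction.

```python
import random


def estimate_delay(played: list[float], recorded: list[float], max_lag: int) -> int:
    """Return the lag (in samples) at which `recorded` best matches `played`.

    Brute-force cross-correlation: for each candidate lag, multiply the played
    signal by the recording shifted by that lag, sum, and keep the best lag.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(
            p * recorded[i + lag]
            for i, p in enumerate(played)
            if i + lag < len(recorded)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    sample_rate = 48_000
    rng = random.Random(0)
    # Test signal: a short noise burst, which only lines up with itself at one lag.
    played = [rng.uniform(-1.0, 1.0) for _ in range(256)]
    # Simulated loopback: 300 samples late, quieter, with a little added noise.
    true_delay = 300
    recorded = [0.0] * true_delay + [0.5 * x for x in played] + [0.0] * 400
    recorded = [x + rng.gauss(0.0, 0.01) for x in recorded]
    lag = estimate_delay(played, recorded, max_lag=600)
    print(f"{lag} samples = {1000 * lag / sample_rate:.2f} ms")
```

A noise burst is used rather than a sine tone because a steady tone matches itself at every period, which would make the peak ambiguous.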

First off, the faster your CPU and memory, the more processing can be done in the time each buffer allows. The same is true for audio interfaces: high-quality interfaces with good drivers do their part of the work more efficiently. So investing in a good setup is the most expensive, but probably the most effective, way to reduce system latency.

Beyond that, most DAWs have the option to “freeze” a track, which renders it to audio including all its effects, so that the plug-ins don’t need to run in real time. The effect settings are stored, so you can unfreeze the track later to make changes.

You may want to consider putting very computationally expensive plug-ins, such as high-end reverbs, on a single effect bus rather than as an insert on each track you want to affect. If the effect is sufficiently linear, processing the mix of the tracks gives the same result as mixing the individually processed tracks, so it will sound the same when you send the tracks to that bus at the appropriate levels, while the plug-in only has to run once.
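Here is a small Python demonstration of that linearity argument. A simple feedback delay stands in for a reverb-style effect; it is not how any particular reverb plug-in works, and the signals and send levels are hypothetical. Processing each scaled track separately and summing gives the same output as summing first and processing once.

```python
def feedback_delay(signal: list[float], delay: int, feedback: float) -> list[float]:
    """Feedback comb filter, y[n] = x[n] + feedback * y[n - delay].

    Linear: doubling the input doubles the output, and the response to a sum
    of inputs is the sum of the individual responses.
    """
    out: list[float] = []
    for n, x in enumerate(signal):
        echo = feedback * out[n - delay] if n >= delay else 0.0
        out.append(x + echo)
    return out


if __name__ == "__main__":
    vocal = [1.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0]
    guitar = [0.0, 0.3, 0.0, -0.2, 0.0, 0.0, 0.0, 0.0]
    send_vocal, send_guitar = 0.8, 0.4  # hypothetical send levels

    # One effect instance per track, each fed its scaled signal, then summed
    per_track = [a + b for a, b in zip(
        feedback_delay([send_vocal * x for x in vocal], 3, 0.5),
        feedback_delay([send_guitar * x for x in guitar], 3, 0.5))]

    # One bus: scaled tracks are summed first, then processed once
    bus_input = [send_vocal * a + send_guitar * b for a, b in zip(vocal, guitar)]
    on_bus = feedback_delay(bus_input, 3, 0.5)

    print(all(abs(p - q) < 1e-12 for p, q in zip(per_track, on_bus)))  # True
```

A compressor or distortion would fail this test, since their output depends on how loud the input is; that is why the trick is reserved for effects that are close to linear.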

Finally, you could limit yourself to a handful of lightweight effects during the recording stage, and add the rest at mixing when the round-trip delay is far less critical.

It also helps to close any unnecessary applications.

How can you reduce latency with your audio interface?

As explained above, the latency you experience when listening to a recording as it happens is essentially the consequence of audio going into the digital domain and back out. So a rather obvious workaround is to NOT go into the digital domain for monitoring. This can be done with an analog mixer that has sends or per-channel outputs. The channels you want to record are sent from the mixer to the audio interface inputs, while the mixer’s main output feeds those same signals, together with the rest of the music or the click coming from the audio interface, to speakers or headphones. As a result, you hear the signal you are recording with zero delay and can play perfectly in time with the audio interface output. The DAW accounts for the round-trip delay, so your recorded part should appear in sync on the timeline.
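Why does the take need correcting at all? The performer hears the playback only after the output latency, and their part then reaches the DAW after the input latency, so it lands one round trip late on the timeline. This Python sketch shows the idea of shifting it back by a known latency in samples (for instance one measured with a loopback test). It is an illustration of the principle, not how any specific DAW implements its compensation.

```python
def align_take(recorded: list[float], round_trip_samples: int) -> list[float]:
    """Move a take earlier by the round-trip latency, keeping its length.

    The first round_trip_samples samples are dropped and silence is padded at
    the end, so the take still lines up with the rest of the session.
    """
    if round_trip_samples < 0:
        raise ValueError("latency cannot be negative")
    shifted = recorded[round_trip_samples:]
    return shifted + [0.0] * (len(recorded) - len(shifted))


if __name__ == "__main__":
    beat = 4     # hypothetical: the note should land on sample 4 of the timeline
    latency = 3  # hypothetical round trip, in samples
    take = [0.0] * 10
    take[beat + latency] = 1.0  # the note as captured, 3 samples late
    aligned = align_take(take, latency)
    print(aligned.index(1.0))  # 4
```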

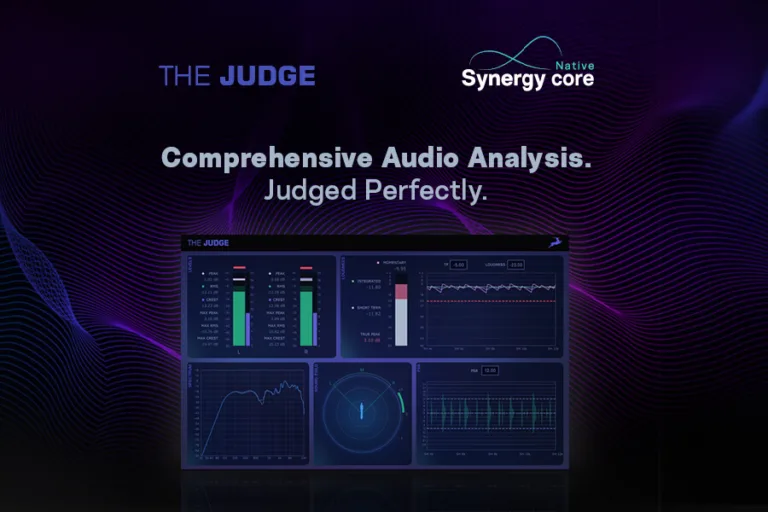

The downside of this simple analog, latency-free monitoring approach is that performers hear their own signal completely dry, which can be uncomfortable and distracting. For instance, a singer might prefer to hear their voice with a little reverb, a bass or guitar player through an amp emulation, a voice talent through EQ and compression, and so on. If you want to avoid both the delay that comes with software monitoring and processing and the significant cost of a good analog mixer, your best bet is hardware monitoring and processing. Audio interfaces like our Synergy Core line handle the processing and monitor mixing on board, with optimized chips that don’t have to juggle all the other jobs a general-purpose CPU does. You get the best of both worlds: fast, high-quality effects on the monitored and/or recorded signal, and ultra-low latency.

It has to be said: another possibility is to not monitor at all! In some cases, for instance with vocalists or acoustic instruments, it may simply not be necessary to hear yourself through the system. You might wear headphones on one ear only, so that you clearly hear yourself acoustically with the other. But of course, with an electric or electronic instrument you rely on amplification to hear yourself, and it can feel unnatural to hear yourself dry and completely detached from the mix.

Reducing or bypassing latency can be one of the most liberating experiences you can have as a studio owner or performer. Fortunately, these days it is within reach for digital and hybrid home studios too. Try it, and you’ll never go back!